Stochastic Variational Contrastive Clustering Autoencoder (SVCCA)

This project introduces a hybrid unsupervised deep learning model that integrates Variational Autoencoding, Contrastive Representation Learning, and Bayesian Deep Clustering into one unified framework. Implemented in PyTorch, SVCCA learns meaningful, uncertainty-aware latent representations from unlabeled data such as MNIST. The model captures both the generative capacity of VAEs and the discriminative structure of contrastive embeddings while quantifying confidence through a Dirichlet-based clustering head. By combining generative fidelity, semantic alignment, and calibrated uncertainty, SVCCA bridges the gap between deterministic clustering and probabilistic representation learning.

7

Tech Stack

5

Key Features

Technologies Used

PyTorch

NumPy

Matplotlib

Jupyter Notebook

Adam Optimizer

MNIST Dataset

Google Colab (GPU)

1 / 5

Key Features

- ●Hybrid Variational–Contrastive Architecture that fuses generation, self-supervision, and probabilistic clustering.

- ●Uncertainty-Aware Clustering using a Dirichlet prior, offering soft, calibrated assignments instead of hard labels.

- ●Contrastive Consistency enforced via NT-Xent loss, aligning stochastic embeddings across augmented views.

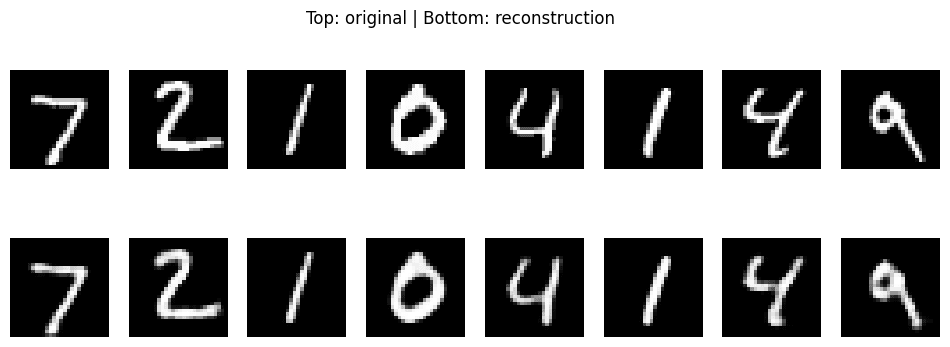

- ●High-Fidelity Reconstructions that preserve digit structure while improving cluster separability.

- ●Interpretable Latent Space Visualizations showing semantically organized clusters with measurable entropy.